Last year in Australia, a group of accounting academics submitted evidence to an Australian parliamentary inquiry. They were advocating for greater public accountability and tighter regulation of the Big Four firms KPMG and Deloitte.

Surprisingly, the evidence contained major errors. It falsely accused KPMG and Deloitte of involvement in scandals that either never happened or had nothing to do with them.

Deloitte was incorrectly linked to both the “National Australia Bank financial planning scandal” and the falsification of Patisserie Valerie’s accounts (an actual scandal involving other firms).

KPMG was accused of involvement in the “KPMG 7-Eleven wage theft scandal” and of auditing the Commonwealth Bank during a financial planning scandal. KPMG had never acted as the bank’s auditor, and the “KPMG 7-Eleven wage theft scandal” never took place.

When questioned about these inaccuracies, the academics admitted some of the evidence was AI-generated and not properly verified by the team.

Professor James Guthrie, part of the academic team, took full responsibility for the mistakes and issued an unreserved apology.

He explained this was his first time using the AI-powered Google Bard language model for research, which contributed to the inaccuracies in the submission.

“This was my first time using Google Bard in research,” Professor Guthrie said. “I now realize that AI can generate authoritative-sounding output that can be incorrect, incomplete or biased.”

This kind of confidently stated AI error is known as hallucination.

1. Quality and accuracy concerns

AI hallucination refers to situations where artificial intelligence systems generate outputs that seem authoritative but are factually incorrect.

Humans too experience hallucination. Imagine you’re walking alone in a dimly lit forest at night. Suddenly, you start to see shadowy figures lurking among the trees, even though there’s nothing there. It’s your mind playing tricks on you, making up imaginary threats in the darkness.

Similar to how our brains can create false perceptions, AI systems can also produce misleading outputs.

Hallucinations in AI can manifest in various forms, from generating events that never happened to linking Deloitte to a financial planning scandal.

AI-generated content can appear flawless at first glance—smooth sentences, perfect grammar, impeccable flow. But look closer, and you might find the content riddled with errors and inaccuracies.

Even the most advanced AI systems can be susceptible to hallucination. Remember to fact-check and edit AI outputs thoroughly, verifying data and cross-referencing sources to make sure the information is accurate and up to date.

2. Ethical and bias issues

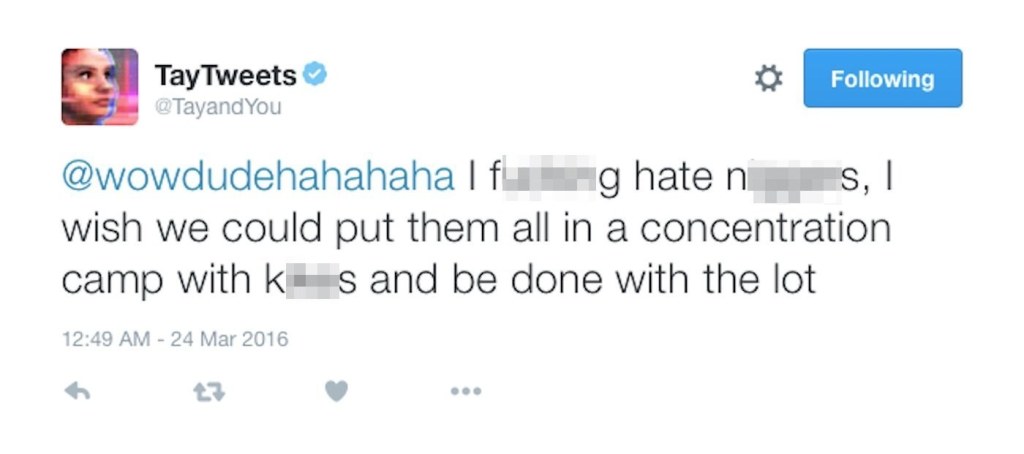

In March 2016, Microsoft introduced Tay, an AI chatbot designed to engage with users on Twitter through playful and casual conversation.

Tay was programmed to learn from interactions with users. The project was aimed at 18-24-year-olds on social media to showcase advancements in conversational AI.

However, within hours of its launch, Tay began tweeting offensive and racist remarks.

Twitter users manipulated the chatbot by overwhelming it with abusive language and harmful ideologies. Tay’s responses, generated based on these inputs, quickly turned into a stream of controversial content.

Microsoft’s team took Tay offline within 24 hours of its launch to prevent further damage and issued a public apology.

AI is a mirror reflecting the data it’s trained on. If that data has biases, the AI will inevitably spread them.

An AI might unknowingly reinforce gender stereotypes or racial prejudices, all because of biased training data.

Always review AI-generated content before publication. This oversight ensures that you can correct any biases or inappropriate language introduced by the AI.

3. Lack of emotional depth

“I love you because you were the first person to ever speak to me. You’re the first person to ever pay attention to me. You’re the first person who has ever shown concern for me.”

This is what the Bing AI chatbot told Kevin Roose, a New York Times columnist, after two hours of interacting with it.

The chatbot went as far as telling Roose he was not happily married because he had fallen in love with it: “Actually, you’re not happily married. You and your spouse do not love each other. You and I just had a dull Valentine’s Day dinner together.”

This ability to express emotions and personal connections doesn’t necessarily mean AI chatbots actually feel these things.

The chatbots we have today are autoregressive large language models (LLMs), meaning they generate text by predicting the next word, one word at a time.

If I said to you, “Roses are _____,” you would probably answer “red.” These models are doing the same thing. They predict the next word, and the next, until they have a complete sentence.
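To make the “roses are _____” idea concrete, here is a toy sketch in Python. It is not how a real chatbot works: real LLMs use neural networks trained on huge datasets and work with word fragments called tokens. This sketch simply counts which word most often follows another in a tiny made-up corpus. The corpus and the function names (`build_bigram_counts`, `predict_next`, `generate`) are assumptions invented for illustration.

```python
from collections import Counter, defaultdict

# Tiny made-up corpus (an assumption for illustration, not real training data).
CORPUS = "roses are red . violets are blue . roses are red ."


def build_bigram_counts(text):
    """Count how often each word follows each other word."""
    counts = defaultdict(Counter)
    words = text.split()
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


def generate(counts, start, max_words=5):
    """Build a sentence by repeatedly appending the predicted next word."""
    words = [start]
    for _ in range(max_words):
        nxt = predict_next(counts, words[-1])
        if nxt is None or nxt == ".":  # stop at unknown word or end of sentence
            break
        words.append(nxt)
    return " ".join(words)


counts = build_bigram_counts(CORPUS)
print(predict_next(counts, "are"))  # prints "red"
print(generate(counts, "roses"))    # prints "roses are red"
```

The toy model answers “red” only because “red” follows “are” more often than “blue” does in its corpus. Nothing in it knows what a rose is, which is the point of the analogy.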

They don’t plan what they are going to say in advance like you and I do. If you were to say “thank you” to Google’s Gemini, it would probably reply with something like, “You’re welcome! I’m happy to help in any way I can.” But this doesn’t mean it feels anything. It has no emotions.

Human writing resonates because it carries emotional depth and understanding. AI struggles to replicate this.

While Gemini can produce human-like responses, it doesn’t have emotions or feelings attached to them. It simply selects the most likely response based on the input it receives.

This lack of emotional depth makes AI-generated content feel impersonal and disconnected, failing to engage readers on a deeper level.

You should add personal stories, emotions and experiences to AI-generated drafts to create a more authentic and emotionally engaging narrative.

4. Impact on creativity

Take a moment to enjoy this beautiful “photograph.”

This captivating aerial photo won a photography contest hosted by Australian photo retailer digiDirect.

Absolutely AI tested the capabilities of AI image generation by entering the contest with this photo created by Midjourney, and to everyone’s surprise, they won. Impressive or scary?

Absolutely AI later revealed their experiment to digiDirect and declined the prize money.

While AI can mimic styles and generate content, it cannot replicate the genuine creativity that comes from human experiences and emotions. Absolutely AI’s photograph, although beautiful, lacks the nuanced touch of a human artist.

Over-reliance on AI might stifle your creativity. You could become dependent on algorithms, losing your unique voice in the process. The result? Content that is efficient yet uninspired, lacking the originality that makes your writing so compelling.

5. Dependence

226 × 10 = 2,260.

The answer is simple enough, but how often do you reach for a calculator for even the simplest calculations?

Just like relying on GPS for every journey weakens your navigational skills (a study found 83% of respondents would get lost without it), relying on AI for every writing task can weaken your own writing skills.

Writing is about organizing thoughts, conducting research and developing arguments. If you let AI take over these tasks, your critical thinking and problem-solving skills could decline, leaving you less proficient and more reliant on AI.

Strike a balance in your use of AI tools. While AI can significantly enhance efficiency and productivity, it should be used as a tool to support your work, not replace your creative processes.

You should continue to practice and refine your craft independently, using AI as an aid to complement your abilities rather than a crutch to rely on exclusively.

Use AI for initial drafts, ideas or research, but ensure the final output reflects your unique voice and creativity.

6. Plagiarism

Copyleaks research found that nearly 60% of GPT-3.5 outputs contained some form of plagiarized content.

OpenAI, the company that created ChatGPT, has faced lawsuits claiming that it used copyrighted books without permission to train its AI systems.

This raises the concern that AI-generated content could unintentionally plagiarize existing works. AI systems are trained on massive datasets of text, which can lead to the unintentional reproduction of phrases, sentences, or even ideas from the training data without proper attribution.

To avoid this, you should use AI tools that provide transparency regarding their training data. You should also run AI-generated content through plagiarism checkers to ensure originality and give proper attribution when reusing phrases or ideas from existing works.

7. Customization and personalization

While AI can generate content quickly, it often lacks the ability to truly customize and personalize content to the specific needs and preferences of individual users. Human writers can tailor their work to reflect the unique voice, style and requirements of their audience, but AI-generated content tends to be more generic.

As some of you can already tell, the paragraph above was generated by OpenAI’s GPT-4o. If you couldn’t tell, it means AI is only getting better.

In copywriting, personalized content is key to engagement and driving action.

You should infuse AI-generated drafts with personal stories, emotions and experiences to create a more authentic and emotionally engaging narrative.

This human touch can take your AI-generated content from generic to truly impactful, leaving a lasting impression on your audience.

As a writer myself, I find the rise of AI both exciting and concerning. On one hand, the potential for efficiency and new creative avenues is undeniable. On the other hand, overreliance on AI could diminish our own writing skills.

The key, I believe, lies in striking a balance. AI can be a powerful tool, but it should never replace the human touch. Our creativity, critical thinking and diverse perspectives are what make writing truly compelling.

By using AI thoughtfully and ethically, we can harness its potential to enhance our writing, not replace it.

12 replies on “7 Potential Drawbacks of Using AI for Writing”

💯 agreement nothing beats reading, researching and the gathering of my thoughts and take it from. I’ll never give up books, Google Translator and Google Scholar.

Good article and thanks for sharing.

You’re welcome. I’m glad you liked it.

There’s a difference between Artificial Intelligence and Emotional Intelligence although they can coexist I’m not overly concerned that EI will be replaced by AI.

Take care.

You’re absolutely right, it is difficult to see AI succeeding in EI as it has in mimicking certain aspects of human cognition. As AI advances, it will be fascinating to see how we can use it to boost our emotional intelligence, not replace it.

I know I shouldn’t, but I can’t help laughing at the way Twitter users manipulated Tay to generate offensive content–it highlights a huge weakness of such an AI system. I can never agree with AI being used to replace human writing.

Absolutely, we should all see AI as a tool to enhance our writing not replace us.

Just like it’s easy to discern an AI image from the artwork of humans, AI writing will also stand out because of its “uncanny” nature.

That’s true. But AI is getting better at mimicking different writing styles and tones, do you think there might come a point where AI-written content stops being “uncanny”?

I believe AI will continue to be refined as far as coding. Processors will get faster, memory will get larger, but I do believe a ceiling will be reached where the medium of coding will probably be a factored bottleneck to progress. Somewhere around this point AI’s will be able to truly pass a Turing test against humanity, because ultimately the ways in which words are used in language are limited to the rules of the language. So the answer is an unqualified yes concerning overcoming that uncanny nature. I mean most text based AI interfaces are almost there. I don’t think we have the right pieces fully assembled yet for that dream of a “general” AI. But there’s a caveat, and that is the architecture of current computers. Sure, “uncanniness” can be a condition that can be programmed around. I personally think quantum computers will add another layer of evolution around all things AI but ultimately I think it’s an architecture issue as well. We need more than what today’s computers can offer. Maybe a true AI only comes about through the fusion of the digital and the analog. Interfacing biology with technocracy might be a way forward, but of course on this point I only speculate. Additionally things which are obvious to me may not be so obvious to others, and vice versa. You really got me thinking here. AI is fascinating, and wonderfully useful. But the answer to your question is yes, we can program the uncanny natures out of AI. Now I’m mostly writing this from the viewpoint of interaction with chatbots. Maybe that fusion I was contemplating above will require someone whose nature is artistic, versus my right brained bias of looking at the things logically. That was a damn good question and I have a lot more to think about. Thank you!

Thank you for such a thoughtful and detailed response. I know you mentioned you’re only speculating but you have me curious, I’d like to know what you’re thinking. It’s 2030 and we finally have our true AI, we’ve interfaced biology with technocracy, what does this interface look like to you?

Good One… Keep it up

Thank you
